This assignment gave us experience using behavioral prototyping to explore a user interaction scenario. This technique can be very effective in testing design assumptions for HCI applications when the actual technology is either not available or too expensive to develop during the design phase of a project.

Deliverables:

- Video of user testing

- Blog post

- In-class presentation

The Assignment

Our challenge was to build and test a behavioral prototype for one of the following three scenarios, working in a team of four:

- Speech to text dictation: an application for voice recognition of spoken text, targeted at creating work documents.

- Wearable posture/position assistant: a wearable device that assists users with body position and alignment either to help with adopting and maintaining good posture throughout the day, or for proper position and alignment during exercise practice such as yoga, pilates, or weightlifting.

- Gesture recognition platform: a gestural user interface for an Apple TV system that allows basic video function controls (play, pause, stop, fast forward, rewind, etc.). The gestural UI can be via a 2D (tablet touch) or a 3D (camera sensor, like Kinect) system.

For the gesture recognition platform, our prototype was designed to explore the following design research and usability questions:

- How can the user effectively control video playback using hand gestures?

- What are the most intuitive gestures for this application?

- What level of accuracy is required in this gesture recognition technology?

We were required to identify a scenario or use case with a specific set of interactions for which we wanted to explore different variations (e.g. creating and editing a user requirements document, practicing yoga and trying to get an optimal position, or pausing and rewinding a video to view a particular scene again).

We were required to plan out our prototype, build it, and record an evaluation session with a user.

It was suggested that we create the following roles in our team to pull off the wizardry successfully:

- Moderator: someone to direct the testing, communicate with the user, and orchestrate the session.

- Operators: probably at least two people to be the wizards behind the curtain. This will depend on exactly how you are going to attempt to fool your user, but there will likely be some manipulation of your prototype that the user does not see in order to accomplish the real-time reaction to his/her actions.

- Scribe: someone to capture notes on the user’s actions, what happened, how the prototype performed, etc.

- Documentarian: someone to capture the user test on video.

In our prototype and video we were required to show evidence that we were able to:

- Simulate an interactive experience between a person and a device/interface/technology

- Allow for real time modification of the experience during a user test

- Make efficient use of low-cost, low-tech materials to produce a quick learning opportunity about a user experience question.

The Team

Our team for this assignment was:

- John Castro – Wizard

- Chip Connor – Moderator

- Nitaya Munkhong – Notetaker

- Gail Thynes – Videographer

My role included creating the SUS post-test survey, building the glove, donating the documents for the usability test kit, creating and conducting the in-class presentation, and writing the Accessories and Location content for the combined blog post. The brainstorming and creation of the scenario was a collaborative effort by the whole team.

The Process

After some debate about methods for all three, we chose to design a gesture-based control system for Apple TV. We chose Apple TV because we had access to one and it was the scenario that seemed the most realistic. We had two goals for the assignment: to evaluate the effectiveness of the gestures and to “fool Dorothy”.

Accessories

To “fool Dorothy” we created two accessories to increase our chances of success:

- A prototype glove with sensors to help us test the gestures

- A video demonstrating how to use the device with gesture commands

This is the video we created to teach the users how to use the system. It was created with the Apple aesthetic to make the experience more believable for the user.

Location

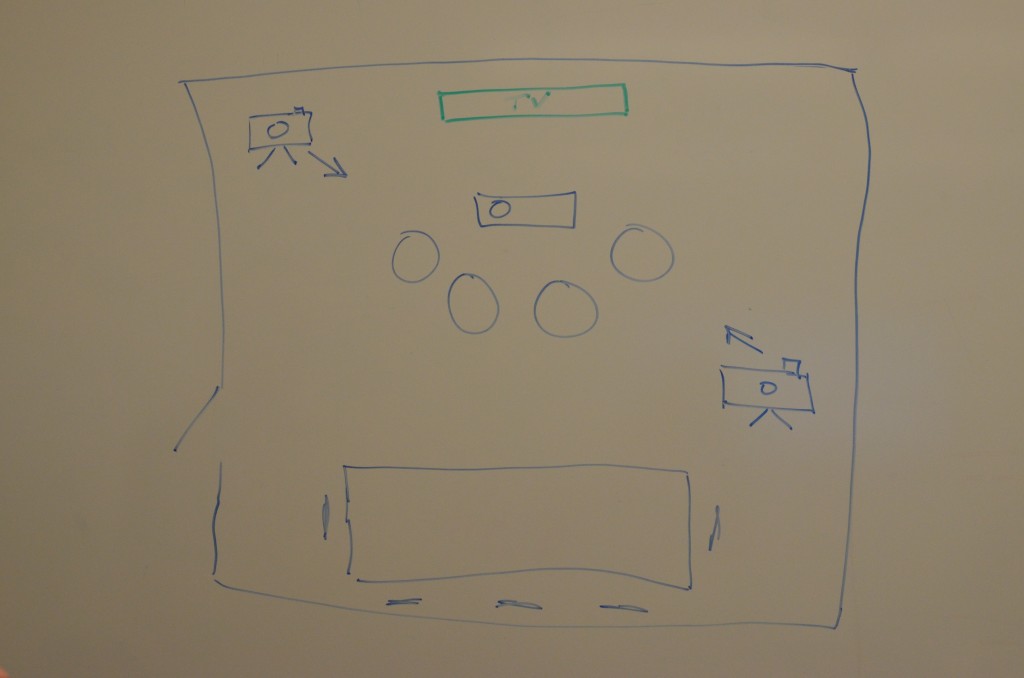

We staged our usability test in a small conference room, Sieg Hall Room 427, on the University of Washington campus. Our initial plans for the room configuration are shown below with a photo of the actual setup. We reconfigured the room to include some of the chairs from the hall to make it look and feel more like a living room.

Equipment

To set the stage for the technical aspects of our “Wizard of Oz” prototyping, we used the following items:

- Samsung 40” TV

- Apple TV

- Apple Remote / Apple Keyboard

- Internet Connection

- Netflix Access

- Ethernet Cable

We initially wanted to connect our Apple TV device to the UW wireless Internet. Unfortunately, even after getting assistance from the UW tech support, we were unable to connect wirelessly. Instead, we used an Ethernet cable to get Internet access and then used the Netflix app on Apple TV. To drive playback, we used the wireless Apple remote. In the end, the wired connection was actually better because we experienced less buffering than when we tried setting up a wireless connection through one of our mobile phones.

The Usability Test

We decided to test the effectiveness and intuitiveness of four gestures. These gestures would be tested in the Apple TV environment, using Netflix to stream the animated movie “Legends of Oz: Dorothy’s Return”.

Gestures

- Play

- Pause

- Fast forward

- Rewind

Documentation

When working with human subjects, we need signed consent forms. In this study, it was especially important to let participants know that they would be video recorded and their image could appear in our final video.

UX-Gesture-Design-Group-Consent-Form

To make the study more convincing, we wrapped up the session like any other session and included a SUS survey. We hoped this would make the process more formal and believable. It also gave the participant a reason to take off the glove and focus on something new. We also hoped the survey would give us more feedback about how successful our “Wizard of Oz” prototyping technique was.

Scenario

We created a scenario with 5 tasks for the usability test that would use all four gestures.

- You are having a movie night with your younger sister, and you’ve decided to watch “Legends of Oz: Dorothy’s Return.” PLAY THE MOVIE

- While watching the movie, you have to go to the bathroom. PAUSE THE MOVIE

- You come back from the bathroom and resume playing the movie. RESUME THE MOVIE

- You come across a part of the movie that is too scary for your younger sister. FAST FORWARD MOVIE 3-MINUTES

- You realize you went too far and need to rewind to a different scene that is 1 minute back. REWIND MOVIE 1-MINUTE

These 5 tasks gave context for the actions we wanted the participants to take. We had each task written down on a separate slip of paper, and the participant read each one aloud before performing the task.

We were not sure how to design the fast forward and rewind gestures to account for increasing and decreasing the speed. We intentionally left these gestures ambiguous to observe how users attempted to increase and decrease the speed.

The Video

Findings

Returning to our goals for this assignment, we assessed how well we created a believable test for the participant and how effective our gestures were.

Did we fool Dorothy?

We conducted two usability tests and fooled both participants! A few things contributed to our success, but we also experienced some unanticipated challenges.

What worked:

- Our introductory video, which demonstrated each of the gestures and made our product look integrated with the Apple TV. Both users seemed to understand the gestures after watching it, and a single viewing was enough for them to replicate each gesture.

- The glove, which we built from some Arduino hardware and told users helped the device register where their hands were moving. We also told them that the glove was an intermediary step and that the end product would ideally not need it.

- Using a consent form, moderator script, and post-test questionnaire.

Challenges:

- There were many technological elements that went into our behavioral prototype, and not everything worked out the way we wanted it to.

- Another step we wanted to take to fool our user was to put the Apple TV remote in plain sight in front of the user and control the video with a wireless keyboard instead, which would be quieter and more discreet. However, on the day of our user testing, we were unable to get access to our wireless keyboard and had to use the remote control. We masked the sound of clicking the remote by turning up the volume on the TV.

- There was a bit of a lag with the fast forward and rewind controls, but we just told our user that it was because our system was still being developed and there were still some kinks. The primary source of the lag was video buffering from online streaming.

Gesture Effectiveness

We developed some criteria to assess the effectiveness of our design, which we evaluated using our notes and video from the user testing as well as our post-study questionnaire. The initial questions we hoped to answer were:

- Could the user learn the system quickly?

- How effective are the gestures for movie navigation?

Users successfully learned the gestures through our introductory video, and our moderator succeeded in syncing controls with their movements. One interesting highlight from our post-test questionnaire was that our first user gave a 2 for “I thought there was too much inconsistency in this system”, while our second user gave a 4. The first user also strongly agreed (5) that the system was easy, while the second user rated this a 2.
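For readers unfamiliar with how SUS ratings turn into a score, here is a minimal sketch of the standard SUS scoring rule: odd-numbered (positively worded) items contribute `rating - 1`, even-numbered (negatively worded) items contribute `5 - rating`, and the sum is multiplied by 2.5. It assumes our survey kept the standard SUS item order, in which the “easy to use” statement is item 3 and the “too much inconsistency” statement is item 6. The function names are ours for illustration, and the full ten-item response list is hypothetical, not our participants' data.

```python
def item_contribution(item_number, rating):
    """Contribution (0-4) of one SUS item, using the standard scoring rule."""
    if not 1 <= item_number <= 10:
        raise ValueError("SUS has items 1 through 10")
    if rating not in (1, 2, 3, 4, 5):
        raise ValueError("ratings must be integers from 1 to 5")
    # Odd items are positively worded, even items negatively worded.
    return rating - 1 if item_number % 2 == 1 else 5 - rating


def sus_score(ratings):
    """Overall SUS score (0-100) from ten ratings, item 1 first."""
    if len(ratings) != 10:
        raise ValueError("SUS needs exactly ten ratings")
    total = sum(item_contribution(i, r) for i, r in enumerate(ratings, start=1))
    return total * 2.5


# The two ratings reported above, assuming standard item numbering:
# item 6 ("too much inconsistency"): P1 gave 2, P2 gave 4
# item 3 ("easy to use"):            P1 gave 5, P2 gave 2
p1 = item_contribution(6, 2) + item_contribution(3, 5)  # 3 + 4
p2 = item_contribution(6, 4) + item_contribution(3, 2)  # 1 + 1

# Hypothetical all-neutral response sheet, only to show the full calculation.
neutral = sus_score([3] * 10)
```

On these two items alone, the first participant's answers contribute 7 of a possible 8 points and the second participant's contribute 2, which matches the impression that the second session felt less consistent to the user.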

The pause and play gestures seemed the easiest to learn and use.

Users had the most issues with the fast forward and rewind gestures. As mentioned earlier, we intentionally left the instructions for these gestures ambiguous on the issue of speed. We were unsure about the best way to control that, so we wanted to see how users handled the situation.

- P1 thought that you had to repeatedly make the gesture, and with more force.

- P2 held their hand out to keep fast forwarding

There were a few inconsistencies with the technology that we would improve on for future iterations:

- Improve the plausibility of the glove

- Use a wireless keyboard to reduce noise

- Have more concrete gestures for fast forward and rewind to test

- Try placing the “Wizard” in another room

Overall, our gesture control system had some minor issues that we could iterate on, but our user testing was successful and revealed valuable information.